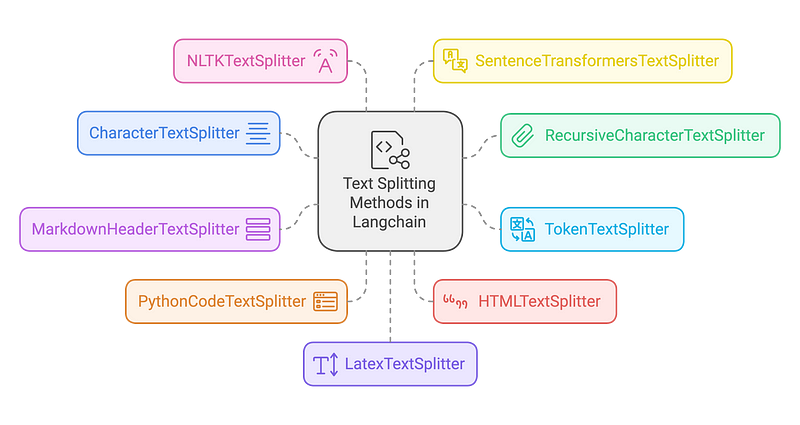

Mastering Text Splitting for Effective RAG with LangChain

Unlock the Power of Text Splitting in LangChain for Diverse Document Types and Use Cases

Introduction:

In the rapidly evolving world of Natural Language Processing (NLP), giving AI models relevant and concise context is key to better outputs. One such approach is Retrieval-Augmented Generation (RAG), where the preprocessing step of text splitting strongly affects retrieval quality. Text splitting divides large text into smaller chunks that can be embedded, indexed, and retrieved individually. LangChain provides a range of text splitters for different document structures and NLP applications. In this article, we’ll discuss some essential text splitters in LangChain, highlight their specific use cases, and demonstrate their functionality with code samples.

1. Character-Driven Splitting: Simple Yet Effective

CharacterTextSplitter is one of LangChain’s simplest splitters. It breaks text on a single separator and then merges the pieces into chunks of up to a given number of characters. It is ideal for straightforward tasks where the document structure is uniform.

Best for:

Homogeneous documents where each chunk is relatively equal in size.

Simple scenarios where detailed control over chunking isn’t necessary.

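from langchain.text_splitter import CharacterTextSplitter

text = "This is an example text that will be split into manageable chunks. Each chunk will have a maximum length defined."

splitter = CharacterTextSplitter(
    separator=" ",      # break on spaces, then merge pieces up to chunk_size
    chunk_size=500,     # at most 500 characters per chunk
    chunk_overlap=100,  # up to 100 characters shared between neighboring chunks
)

chunks = splitter.split_text(text)
print(chunks)

Here, the splitter breaks the text on spaces and merges the pieces into chunks of at most 500 characters, with up to 100 characters of overlap between neighboring chunks. The sample text is shorter than 500 characters, so it comes back as a single chunk. For longer documents, using double newlines as the separator avoids splitting in the middle of a paragraph.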
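To see how a chunk size and an overlap interact, here is a minimal pure-Python sketch of fixed-size chunking with a sliding window. It is an illustration only, not LangChain's implementation (which splits on a separator first and then merges pieces); the function name sliding_chunks is hypothetical.

```python
def sliding_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of chunk_size characters sharing chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
chunks = sliding_chunks(sample, chunk_size=10, chunk_overlap=4)
print(chunks)
```

Each chunk starts 6 characters after the previous one (10 minus 4), so the last 4 characters of one chunk reappear at the start of the next.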

2. Flexibility in Chunking with Recursive Splitting

When more flexibility is required, RecursiveCharacterTextSplitter comes in handy. It allows splitting based on multiple separator patterns, progressively refining the chunking logic.

Best for:

Handling documents with varied structures.

Scenarios requiring optimal chunking based on specific delimiters.

from langchain.text_splitter import RecursiveCharacterTextSplitter

text = "This is a document with different types of delimiters. We will use recursive splitting to handle it."

splitter = RecursiveCharacterTextSplitter(
    separators=["\n\n", "\n", " ", ""],  # Multiple delimiters, tried in order
    chunk_size=400,                      # Chunk size adjusted for flexibility
    chunk_overlap=50,                    # Overlap of 50 characters for maintaining context
)

chunks = splitter.split_text(text)
print(chunks)

This splitter works by first trying to split the text using double newlines, then falling back to single newlines, spaces, and finally individual characters if necessary.
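The fallback order described above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not LangChain's code: it ignores chunk overlap, and the function name recursive_split is hypothetical.

```python
def recursive_split(text: str, separators: list[str], chunk_size: int) -> list[str]:
    """Split on the first separator; recurse with finer ones on oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators:
        # No separators left: fall back to hard cuts of chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, finer = separators[0], separators[1:]
    pieces = text.split(sep) if sep else list(text)
    chunks, current = [], ""
    for piece in pieces:
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # the piece still fits in the running chunk
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too large: retry it with the finer separators.
            chunks.extend(recursive_split(piece, finer, chunk_size))
    if current:
        chunks.append(current)
    return chunks


text = (
    "First paragraph is short.\n\n"
    "Second paragraph is quite a bit longer than the first one."
)
chunks = recursive_split(text, ["\n\n", "\n", " ", ""], chunk_size=40)
print(chunks)
```

The first paragraph fits as a whole, while the second is too long for 40 characters and is therefore broken at spaces instead.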

3. Token-Based Chunking for Model-Sensitive Tasks

For language models with strict token limits, TokenTextSplitter is the ideal solution. It measures chunk size in tokens rather than characters, so each chunk fits within the limits of models such as OpenAI's GPT family.

Best for:

Ensuring chunks fit within token constraints for language models.

Handling documents where token limits are crucial.

from langchain.text_splitter import TokenTextSplitter

text = "This document is being split based on token limits."

splitter = TokenTextSplitter(
    encoding_name="cl100k_base",  # tiktoken encoding; requires the tiktoken package
    chunk_size=80,                # Split text into chunks of 80 tokens
    chunk_overlap=20,             # 20-token overlap for context
)

chunks = splitter.split_text(text)
print(chunks)

This splitter operates on tokens rather than characters, which matters whenever a model's input limit is measured in tokens.
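The difference from character-based splitting is that the window slides over a token sequence. The sketch below shows the idea with a toy tokenizer; toy_tokenize and split_by_tokens are hypothetical names, and the word-and-punctuation tokenizer is an assumption standing in for a real model tokenizer, which would produce different tokens.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Stand-in tokenizer: words and punctuation marks become separate tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def split_by_tokens(text: str, chunk_size: int, chunk_overlap: int) -> list[list[str]]:
    """Slide a window of chunk_size tokens over the text, sharing chunk_overlap tokens."""
    step = chunk_size - chunk_overlap
    if step <= 0:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    tokens = toy_tokenize(text)
    windows = []
    start = 0
    while start < len(tokens):
        windows.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window reached the end of the token list
        start += step
    return windows


text = "This document is being split based on token limits."
windows = split_by_tokens(text, chunk_size=5, chunk_overlap=2)
for window in windows:
    print(len(window), window)
```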

4. Splitting Based on Markdown Headers

MarkdownHeaderTextSplitter is perfect for splitting Markdown documents while maintaining their structural integrity. This method respects headers and organizes chunks based on the document hierarchy.

Best for:

Markdown documentation.

Projects where content must be divided based on sections, subsections, or headers.

from langchain.text_splitter import MarkdownHeaderTextSplitter

markdown_text = """
# Title

## Section 1
This is some content.

## Section 2
More content here.
"""

splitter = MarkdownHeaderTextSplitter(
    headers_to_split_on=[("#", "Header 1"), ("##", "Header 2")]
)

chunks = splitter.split_text(markdown_text)
print(chunks)

This splitter uses the Markdown header hierarchy to create logically coherent text chunks. Each chunk is returned as a Document whose metadata records the headers it falls under, which keeps the structure of large Markdown files available at retrieval time.
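The header-based grouping can be sketched with the standard library. This is a simplified illustration, not LangChain's implementation; the function name split_by_headers, the max_level parameter, and the dictionary output format are assumptions made for the example, and header-like lines inside code fences are not handled.

```python
import re


def split_by_headers(markdown: str, max_level: int = 2) -> list[dict]:
    """Group body lines under their enclosing headers (levels 1..max_level)."""
    header_re = re.compile(r"^(#{1,6})\s+(.+)$")
    path: dict[int, str] = {}  # header level -> title currently in effect
    chunks: list[dict] = []
    body: list[str] = []

    def flush() -> None:
        content = "\n".join(body).strip()
        if content:
            metadata = {f"Header {lvl}": title for lvl, title in sorted(path.items())}
            chunks.append({"content": content, "metadata": metadata})
        body.clear()

    for line in markdown.splitlines():
        match = header_re.match(line)
        if match and len(match.group(1)) <= max_level:
            flush()
            level = len(match.group(1))
            # A new header replaces any header at the same or a deeper level.
            for stale in [lvl for lvl in path if lvl >= level]:
                del path[stale]
            path[level] = match.group(2).strip()
        else:
            body.append(line)
    flush()
    return chunks


markdown_text = "# Title\n\n## Section 1\n\nThis is some content.\n\n## Section 2\n\nMore content here.\n"
chunks = split_by_headers(markdown_text)
for chunk in chunks:
    print(chunk)
```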

5. Optimizing Code-Based Splitting

The PythonCodeTextSplitter is tailored to Python source files. It prefers to split at structural boundaries such as class and function definitions, and only falls back to finer breaks when a piece is still larger than the chunk size. This keeps related code together while facilitating easier analysis or documentation.

Best for:

Python codebases.

Preserving the structure of code while splitting for analysis or documentation.

from langchain.text_splitter import PythonCodeTextSplitter

python_code = """
def example_function():
    print("This is an example.")

class ExampleClass:
    def __init__(self):
        self.val = 10
"""

splitter = PythonCodeTextSplitter(
    chunk_size=100,
    chunk_overlap=20,
)

chunks = splitter.split_text(python_code)
print(chunks)

This approach favors splits at function or class boundaries, giving clearer, more structured output. A definition longer than chunk_size can still be split internally.
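Splitting at definition boundaries can be illustrated with a single regular expression. This is a minimal sketch, not LangChain's implementation; split_top_level is a hypothetical name, and the pattern deliberately ignores decorators and async functions.

```python
import re


def split_top_level(source: str) -> list[str]:
    """Cut Python source just before each top-level def or class statement."""
    # The lookahead keeps "def"/"class" in the following block; indented
    # definitions (methods) do not match because they start with spaces.
    parts = re.split(r"\n(?=(?:def|class) )", source.strip())
    return [part.strip() for part in parts if part.strip()]


source = '''
import math

def area(radius):
    return math.pi * radius ** 2

class Circle:
    def __init__(self, radius):
        self.radius = radius
'''
blocks = split_top_level(source)
for block in blocks:
    print(block)
    print("-----")
```

The method inside Circle stays with its class because only unindented def and class lines start a new block.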

6. Web Content Parsing with HTMLHeaderTextSplitter

For web scraping or processing HTML content, HTMLHeaderTextSplitter splits a page at its heading tags and records, for each chunk, the headings it falls under. This keeps the document hierarchy available as metadata, which is useful for content extraction or analysis.

Best for:

HTML web pages.

Projects where the HTML structure must be maintained for proper analysis.

from langchain.text_splitter import HTMLHeaderTextSplitter

html_text = """
<html>
<head><title>Example</title></head>
<body>
<h1>Main Title</h1>
<p>Paragraph 1</p>
</body>
</html>
"""

# Relies on an HTML parsing package (lxml or BeautifulSoup, depending on the LangChain version)
splitter = HTMLHeaderTextSplitter(
    headers_to_split_on=[("h1", "Header 1"), ("h2", "Header 2")]
)

chunks = splitter.split_text(html_text)
print(chunks)

This splitter returns Document objects whose metadata carries the enclosing headings. To cap chunk length as well, the resulting documents can be passed through RecursiveCharacterTextSplitter.
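The idea of grouping page text under its headings can be sketched with the standard library alone. The following is a minimal illustration, not LangChain's implementation; the class name HeadingSectioner and the choice to track only h1 to h3 are assumptions made for this example.

```python
from html.parser import HTMLParser


class HeadingSectioner(HTMLParser):
    """Collect visible text under the most recent h1-h3 heading."""

    HEADINGS = {"h1", "h2", "h3"}
    SKIPPED = {"script", "style", "title"}  # content that is not body text

    def __init__(self) -> None:
        super().__init__()
        self.sections: list[dict] = []
        self._in_heading = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1
        elif tag in self.HEADINGS:
            self._in_heading = True
            self.sections.append({"heading": "", "text": []})

    def handle_endtag(self, tag):
        if tag in self.SKIPPED:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in self.HEADINGS:
            self._in_heading = False

    def handle_data(self, data):
        text = data.strip()
        if not text or self._skip_depth:
            return
        if self._in_heading:
            self.sections[-1]["heading"] += text
        elif self.sections:  # text before the first heading is ignored here
            self.sections[-1]["text"].append(text)


html_text = (
    "<html><head><title>Example</title></head><body>"
    "<h1>Main Title</h1><p>Paragraph 1</p>"
    "<h2>Details</h2><p>Paragraph 2</p>"
    "</body></html>"
)
parser = HeadingSectioner()
parser.feed(html_text)
parser.close()
sections = parser.sections
print(sections)
```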

7. Advanced Linguistic Splitting with NLTK

For those looking for text segmentation based on linguistic principles, NLTKTextSplitter uses the Natural Language Toolkit (NLTK) to detect sentence boundaries and builds chunks out of whole sentences.

Best for:

Well-structured, linguistically rich text.

Scenarios where sentence or paragraph integrity is important.

from langchain.text_splitter import NLTKTextSplitter

text = "This is a document. It contains multiple sentences. Each sentence is split separately."

# Requires the nltk package and its sentence tokenizer data
splitter = NLTKTextSplitter(
    chunk_size=150,
    chunk_overlap=30,
)

chunks = splitter.split_text(text)
print(chunks)

This method keeps sentences intact, so each chunk reads as natural language. Detected sentences are merged into chunks of up to chunk_size characters, so a short text like this sample may come back as a single chunk.
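The two steps involved, finding sentence boundaries and packing whole sentences into chunks, can be sketched as follows. The regular expression is a naive stand-in for NLTK's trained sentence tokenizer (it will break on abbreviations such as "Dr."), and the function names are hypothetical.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def pack_sentences(sentences: list[str], chunk_size: int) -> list[str]:
    """Merge whole sentences into chunks of at most chunk_size characters."""
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > chunk_size:
            chunks.append(current)  # adding this sentence would overflow the chunk
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


text = "This is a document. It contains multiple sentences. Each sentence is split separately."
sentences = split_sentences(text)
chunks = pack_sentences(sentences, chunk_size=60)
print(sentences)
print(chunks)
```

A single sentence longer than chunk_size is kept whole in this sketch rather than cut mid-sentence.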

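8. Splitting for Sentence-Embedding Models

SentenceTransformersTokenTextSplitter splits text by token count using the tokenizer of a sentence-transformers model, so each chunk fits within the input window of the embedding model that will encode it. It does not compare embeddings to find topic boundaries; a chunk is only as coherent as the text that happens to fall inside its token window.

Best for:

Preparing chunks that will be embedded with a sentence-transformers model.

Projects where text beyond the embedding model's token limit would otherwise be truncated.

from langchain.text_splitter import SentenceTransformersTokenTextSplitter

text = "This is an example text. We aim to split it into chunks sized for an embedding model."

# Requires the sentence-transformers package
splitter = SentenceTransformersTokenTextSplitter(
    tokens_per_chunk=100,  # chunk length in model tokens
    chunk_overlap=20,      # tokens shared between neighboring chunks
)

chunks = splitter.split_text(text)
print(chunks)

This method ensures that no chunk exceeds what the embedding model can read, which protects the quality of the embeddings used for retrieval.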
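Chunking by meaning, as opposed to by length, usually works by comparing neighboring sentences and starting a new chunk where their similarity drops. The sketch below shows that idea with a toy bag-of-words cosine similarity; this is an assumption standing in for real sentence embeddings, and the function names and the 0.3 threshold are hypothetical choices for the example.

```python
import math
import re
from collections import Counter


def bow(sentence: str) -> Counter:
    """Bag-of-words vector; a toy stand-in for a sentence embedding."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: list[str], threshold: float) -> list[str]:
    """Start a new chunk wherever neighboring sentences are less similar than threshold."""
    if not sentences:
        return []
    groups = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(bow(prev), bow(cur)) >= threshold:
            groups[-1].append(cur)
        else:
            groups.append([cur])
    return [" ".join(group) for group in groups]


sentences = [
    "Cats sleep most of the day.",
    "Cats also sleep at night.",
    "Python lists are mutable.",
    "Python tuples are immutable.",
]
chunks = semantic_chunks(sentences, threshold=0.3)
print(chunks)
```

With real embeddings the comparison step stays the same; only the bow function would be replaced by a call to an embedding model.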

9. LaTeX Document Processing

For academic or scientific documents written in LaTeX, LatexTextSplitter is designed to split text while respecting LaTeX-specific commands and environments.

Best for:

LaTeX documents in academia or scientific research.

Maintaining LaTeX formatting and structure in processing.

from langchain.text_splitter import LatexTextSplitter

latex_text = r"""
\documentclass{article}
\begin{document}

\section{Introduction}
This is an introduction section with some text.

\section{Methodology}
This section discusses the methods used for processing the data.

\section{Conclusion}
The conclusion summarizes the findings.

\end{document}
"""

splitter = LatexTextSplitter(
    chunk_size=100,    # Adjust chunk size as needed
    chunk_overlap=20,  # Adjust overlap for context retention
)

chunks = splitter.split_text(latex_text)
print(chunks)

This approach helps to split LaTeX files while keeping the formatting and structure intact for more accurate document processing.
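Splitting at sectioning commands can be sketched with a regular expression. This is a minimal illustration, not LangChain's implementation; split_latex_sections is a hypothetical name, and the pattern handles only plain \section{...} commands (not starred or nested sectioning).

```python
import re


def split_latex_sections(source: str) -> list[dict]:
    """Return one entry per \\section{...}, with its title and body text."""
    pattern = re.compile(r"\\section\{([^}]*)\}")
    matches = list(pattern.finditer(source))
    sections = []
    for i, match in enumerate(matches):
        # A section's body runs until the next \section or the end of the file.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(source)
        body = source[match.end():end].replace(r"\end{document}", "").strip()
        sections.append({"title": match.group(1), "body": body})
    return sections


latex_text = r"""
\documentclass{article}
\begin{document}
\section{Introduction}
This is an introduction section with some text.
\section{Methodology}
This section discusses the methods used for processing the data.
\section{Conclusion}
The conclusion summarizes the findings.
\end{document}
"""
sections = split_latex_sections(latex_text)
for section in sections:
    print(section["title"], "->", section["body"])
```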

Conclusion

Selecting the right text splitting method for your RAG pipeline is crucial for improving retrieval quality and, with it, model accuracy. LangChain offers several specialized splitters, each tailored to specific document types and use cases. Whether you’re working with plain text, code, Markdown, HTML, or LaTeX, LangChain provides flexible solutions that help preserve the structure and meaning of your data.

By understanding the strengths and limitations of each text splitter, you can optimize your document processing and create more efficient RAG applications. Experiment with different techniques, tweak parameters to suit your project needs, and ensure that your AI-powered applications are as effective and precise as possible.
